How I Ship Software

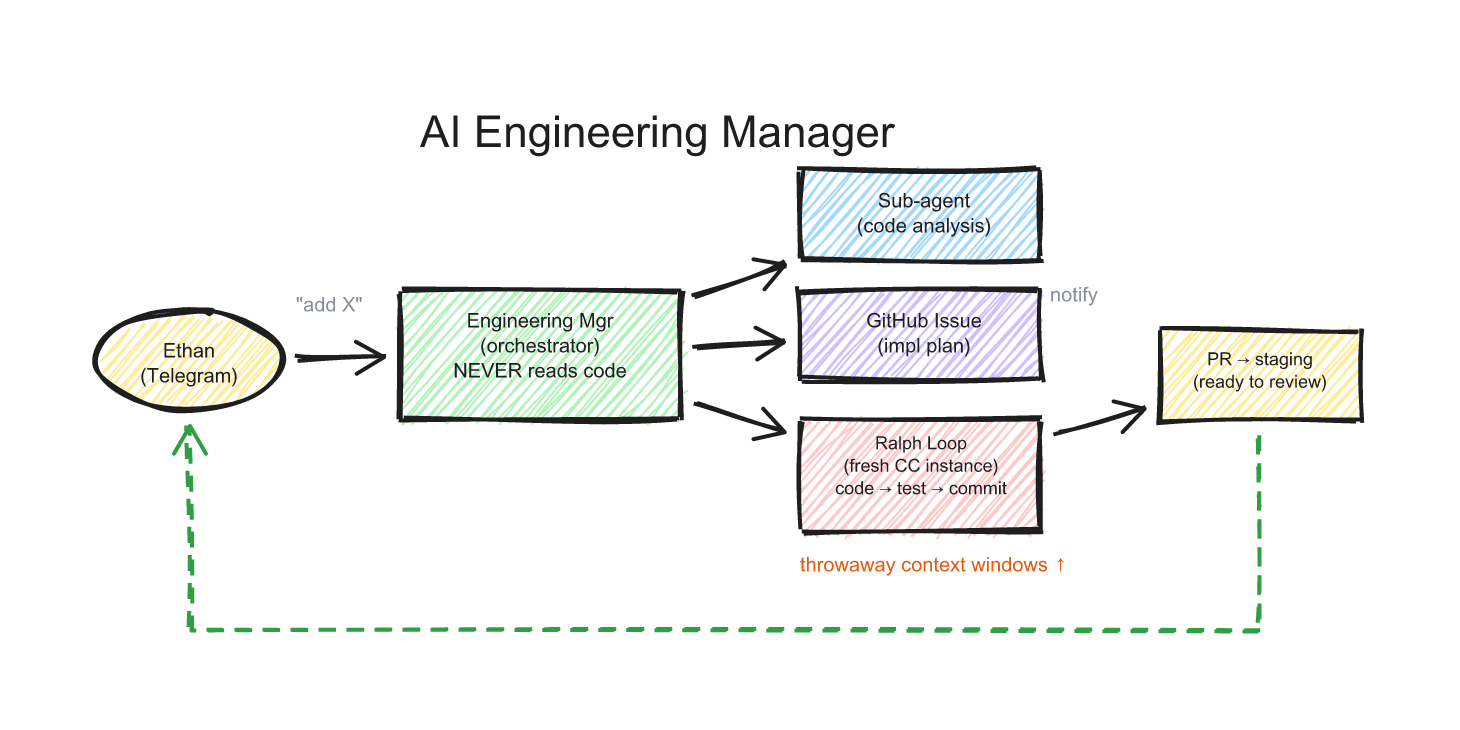

how i develop with OpenClaw. text a feature, review the plan, ship the PR.

i text "add dark mode to the settings page" on Telegram. twenty minutes later there's a PR on staging with tests passing. i never opened a laptop.

that's the whole workflow. a feature idea arrives as a message, and the agent handles everything between that message and a reviewable pull request. no IDE, no terminal, no context switching. just me on my couch texting my engineering team, except the team is one agent.

the context-aware PRD skill

this is the most important part of the whole system.

i built a custom OpenClaw skill called create-issue. when i type /create-issue add dark mode to settings, the orchestrator agent does NOT read the codebase. it doesn't touch a single file. instead, it spawns a sub-agent with a throwaway context window and says: "go analyze the codebase, figure out what needs to change, and come back with a plan."

the sub-agent reads the relevant files, maps the architecture, identifies where changes need to happen, and creates a detailed GitHub issue. not a vague ticket. a real PRD: which files to modify, what the expected behavior is, edge cases to handle, how to test it. the kind of plan a senior engineer would write after spending 30 minutes reading the code.
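a minimal sketch of what that PRD might look like as data, assuming the sub-agent fills in a structured template before posting it. the `Prd` class, its field names, and `render_issue_body` are hypothetical illustrations of the sections listed above (files, behavior, edge cases, testing), not the actual create-issue skill.

```python
from dataclasses import dataclass, field


@dataclass
class Prd:
    """Hypothetical shape of the plan the analysis sub-agent writes."""
    title: str
    files_to_modify: list[str]
    expected_behavior: str
    edge_cases: list[str] = field(default_factory=list)
    test_plan: list[str] = field(default_factory=list)


def render_issue_body(prd: Prd) -> str:
    """Render the PRD as a Markdown GitHub issue body."""
    def bullets(items: list[str]) -> str:
        # Empty sections still appear, so a reviewer can see nothing was skipped.
        return "\n".join(f"- {item}" for item in items) or "- (none)"

    sections = [
        "## Files to modify", bullets(prd.files_to_modify),
        "## Expected behavior", prd.expected_behavior,
        "## Edge cases", bullets(prd.edge_cases),
        "## How to test", bullets(prd.test_plan),
    ]
    return "\n\n".join(sections)


if __name__ == "__main__":
    print(render_issue_body(Prd(
        title="add dark mode to settings",
        files_to_modify=["src/settings/SettingsPage.tsx"],  # hypothetical path
        expected_behavior="toggle persists to localStorage; .dark class on body",
        edge_cases=["no stored preference: follow system preference"],
        test_plan=["toggle, reload, confirm the theme persists"],
    )))
```

keeping every section mandatory, even when empty, is what separates this from a vague ticket: the reviewer can see at a glance whether edge cases and tests were actually considered.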

then the sub-agent throws its context away. gone. the orchestrator never sees a line of code, and the codebase analysis doesn't pollute the main session. this is critical. the moment you load source files into the orchestrator's context, its output starts degrading: code is noisy and verbose, and it eats tokens fast.
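a sketch of that isolation boundary, assuming the file reader and issue creator are passed in as plain callables. `run_analysis_subagent`, `Orchestrator`, and both callables are hypothetical stand-ins, not OpenClaw's API; the point is that file contents live only in the sub-agent's local `context`, and the orchestrator's history only ever receives the issue URL.

```python
from typing import Callable


def run_analysis_subagent(
    request: str,
    relevant_paths: list[str],
    read_file: Callable[[str], str],
    create_issue: Callable[[str, str], str],
) -> str:
    """Analyze code in a private context, post a plan, return only its URL."""
    # Fresh, throwaway context: nothing from the orchestrator's history is copied in.
    context: list[str] = [f"task: {request}"]
    for path in relevant_paths:
        context.append(f"--- {path} ---\n{read_file(path)}")
    # A real agent would send `context` to a model and get a PRD back; this
    # sketch only drafts a placeholder body listing the files it inspected.
    body = "files inspected:\n" + "\n".join(f"- {p}" for p in relevant_paths)
    # `context` is a local variable, so it is discarded when this returns.
    return create_issue(request, body)


class Orchestrator:
    """Long-lived session that delegates analysis and never sees source code."""

    def __init__(self) -> None:
        self.history: list[str] = []

    def create_issue_skill(self, request: str, **tools) -> str:
        self.history.append(f"user: {request}")
        url = run_analysis_subagent(request, **tools)
        # Only the pointer to the plan enters the main session.
        self.history.append(f"plan ready for review: {url}")
        return url
```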

why the plan is everything

i review the GitHub issue before any code gets written. this is the human-in-the-loop moment that actually matters.

when the AI produces bad code, it's almost always because the plan was vague. "add dark mode" produces garbage. but a PRD that says "add a dark mode toggle to the settings page that persists to localStorage, respects system preference on first load, and applies a .dark class to the body element" produces something you can ship.

the skill asks clarifying questions too. "dark mode everywhere or just settings? toggle or dropdown? should it respect system preference?" two or three messages, enough to remove ambiguity. then it writes the plan.
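one way to model the clarifying step, assuming the skill tracks which parts of the spec are still unknown. `DarkModeSpec`, `QUESTIONS`, and `clarifying_questions` are hypothetical; each field left as `None` turns into one question, capped at three.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class DarkModeSpec:
    # None means "not yet specified"; each None becomes a clarifying question.
    scope: Optional[str] = None                  # "settings only" or "everywhere"
    control: Optional[str] = None                # "toggle" or "dropdown"
    respects_system_pref: Optional[bool] = None


QUESTIONS = {
    "scope": "dark mode everywhere or just settings?",
    "control": "toggle or dropdown?",
    "respects_system_pref": "should it respect system preference?",
}


def clarifying_questions(spec: DarkModeSpec, limit: int = 3) -> list[str]:
    """Return up to `limit` questions for fields the request left ambiguous."""
    # fields() preserves declaration order, so questions come out in a stable order.
    missing = [f.name for f in fields(spec) if getattr(spec, f.name) is None]
    return [QUESTIONS[name] for name in missing][:limit]
```

note that `False` counts as an answer here: "don't follow system preference" is a decision, not an ambiguity.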

the real skill in working with AI is directing it well. when something goes wrong, the first question isn't "why is the AI bad?" but "what did i fail to specify?"

implementation: ralph loops

once i approve the plan, the agent spawns an isolated Claude Code session to implement it. this uses a pattern called Ralph Loops: a fresh instance gets spun up for each task. completely clean context, no accumulated history, no confusion from previous work.

the instance reads the GitHub issue, writes code, runs the test suite, and if tests pass, commits. if tests fail, it reads the failure, fixes, and tries again. fresh context per task, external verification, commit on green, repeat.
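the loop described above, sketched under stated assumptions: `spawn_fresh_agent`, `run_tests`, and `commit` are hypothetical callables standing in for launching a new Claude Code session, running the project's test suite, and making a git commit. the retry cap (`max_iterations`) is also an assumption; the post doesn't say how many attempts are allowed.

```python
from typing import Callable, Optional


def ralph_loop(
    issue_text: str,
    spawn_fresh_agent: Callable[[str], None],
    run_tests: Callable[[], tuple[bool, str]],
    commit: Callable[[str], None],
    max_iterations: int = 5,
) -> bool:
    """Fresh context per attempt, tests as the judge, commit only on green."""
    last_failure: Optional[str] = None
    for attempt in range(1, max_iterations + 1):
        # Each attempt gets a brand-new prompt built from the issue and, at
        # most, the latest test failure. No earlier conversation carries over.
        prompt = issue_text
        if last_failure is not None:
            prompt += f"\n\nthe test suite failed last time:\n{last_failure}"
        spawn_fresh_agent(prompt)
        ok, output = run_tests()  # external verification, not the agent's opinion
        if ok:
            commit(f"implement issue (attempt {attempt})")
            return True
        last_failure = output
    return False  # give up and hand back to the human
```

passing only the latest failure, rather than the whole transcript, is what keeps each attempt's context clean.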

this solves context rot. when a single AI session works on a codebase for hours, it starts hallucinating about code it saw 40 minutes ago: functions that have since been refactored, variables that have since been renamed. Ralph Loops sidestep this entirely. every iteration starts clean, and the tests are the source of truth, not the AI's memory.

the architecture

three layers, completely separated:

1. orchestrator (my OpenClaw agent). handles intent, planning, coordination, and communication. never reads code. this is the engineering manager. it talks to me, delegates work, and reviews results.

2. analysis sub-agent (throwaway). reads the codebase, creates the PRD as a GitHub issue, then its context gets discarded. this is the staff engineer who does the deep dive and writes the spec.

3. implementation sub-agent (Ralph Loop). fresh Claude Code instance that implements the plan from the GitHub issue. writes code, runs tests, opens a PR. this is the engineer who picks up the ticket and builds it.

the orchestrator stays sharp because it only handles high-level decisions. the sub-agents do the heavy lifting in isolated contexts that get thrown away. this is the same pattern as House Agents but applied to an entire development workflow.
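the three layers can be written down as a small table of capabilities. this is a hypothetical sketch: `Layer`, `Lifetime`, `LAYERS`, and `read_source` are illustrative names, and the guard in `read_source` just makes the "orchestrator never reads code" rule explicit.

```python
from dataclasses import dataclass
from enum import Enum


class Lifetime(Enum):
    LONG_LIVED = "the main session"
    THROWAWAY = "discarded when the task ends"


@dataclass(frozen=True)
class Layer:
    name: str
    role: str
    reads_code: bool
    lifetime: Lifetime
    produces: str


LAYERS = [
    Layer("orchestrator", "engineering manager", False, Lifetime.LONG_LIVED,
          "delegated tasks and status updates to the human"),
    Layer("analysis sub-agent", "staff engineer", True, Lifetime.THROWAWAY,
          "a PRD posted as a GitHub issue"),
    Layer("implementation sub-agent", "engineer", True, Lifetime.THROWAWAY,
          "commits and a pull request"),
]


def read_source(layer: Layer, path: str) -> str:
    """Refuse to load source files for any layer not allowed to read code."""
    if not layer.reads_code:
        raise PermissionError(f"{layer.name} should delegate instead of reading {path}")
    with open(path, encoding="utf-8") as f:
        return f.read()
```

the design choice worth noticing: every layer that reads code also has a throwaway lifetime, so code never accumulates anywhere that persists.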

compound engineering

the agent also maintains a CLAUDE.md memory file that it updates after every task: what went well, what failed, patterns to avoid, codebase-specific quirks. this is compound engineering: each unit of work makes the next one better. the agent gets sharper over time because it's literally writing down what it learned.
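a minimal sketch of the memory update, assuming the agent appends a dated section to CLAUDE.md after each task. `record_lessons` and the section headings are hypothetical; they mirror the four categories above.

```python
from datetime import date
from pathlib import Path
from typing import Optional


def record_lessons(
    memory_file: Path,
    task: str,
    went_well: list[str],
    failed: list[str],
    avoid: list[str],
    quirks: list[str],
    today: Optional[date] = None,
) -> None:
    """Append a dated section of lessons to the agent's memory file."""
    today = today or date.today()
    lines = [f"\n## {today.isoformat()}: {task}"]
    sections = [
        ("what went well", went_well),
        ("what failed", failed),
        ("patterns to avoid", avoid),
        ("codebase quirks", quirks),
    ]
    for heading, items in sections:
        if items:  # skip empty categories to keep the file short
            lines.append(f"### {heading}")
            lines.extend(f"- {item}" for item in items)
    # Append mode creates the file on first use and never rewrites old lessons.
    with memory_file.open("a", encoding="utf-8") as f:
        f.write("\n".join(lines) + "\n")
```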

why it matters

a single person can ship real features to real products from the couch. the feedback loop is: text an idea → review the plan on GitHub → approve → PR lands on staging. the human stays in the loop at the plan, not the code. that's the whole pattern.
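the feedback loop can be sketched as a tiny state machine. the stage and event names are hypothetical, and the `request_changes` transition back to `IDEA` is an assumption about what happens when a plan isn't approved. the useful property is that there is no path to `PR_ON_STAGING` that skips `APPROVED`.

```python
from enum import Enum, auto


class Stage(Enum):
    IDEA = auto()            # a message arrives on Telegram
    PLAN_IN_REVIEW = auto()  # PRD posted as a GitHub issue
    APPROVED = auto()        # the human signed off on the plan
    PR_ON_STAGING = auto()   # implementation opened a PR


ALLOWED = {
    (Stage.IDEA, "plan_written"): Stage.PLAN_IN_REVIEW,
    (Stage.PLAN_IN_REVIEW, "approve"): Stage.APPROVED,
    (Stage.PLAN_IN_REVIEW, "request_changes"): Stage.IDEA,  # assumed re-plan path
    (Stage.APPROVED, "pr_opened"): Stage.PR_ON_STAGING,
}


def advance(stage: Stage, event: str) -> Stage:
    """Move to the next stage, rejecting any shortcut around plan review."""
    try:
        return ALLOWED[(stage, event)]
    except KeyError:
        raise ValueError(f"{event!r} is not allowed from {stage.name}") from None
```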

this is how personal projects actually get maintained. not weekend coding sprints that never happen, but 30-second Telegram messages that turn into shipped features by the time you finish your coffee.
